Data science ethics: Algorithmic bias + Data privacy

Lecture 12

Duke University

STA 199 - Fall 2022

10/6/22

Warm up

While you wait for class to begin…

Important

Study participation + exam extra credit opportunity:

- Optional! But the researchers would appreciate your participation!

- Go to bit.ly/sta199-learning-study-1 to fill out the survey.

- I won’t see your responses, so answer freely and honestly.

- I will get a list of who filled out the survey from Duke Learning Innovations and use that to award +4 points extra credit for Exam 1.

- IRB Protocol #2022-0545.

Announcements

- HW 3 posted later today, due one week from now

- No OH over Fall break (weekend, Mon, Tue)

- Midterm evaluation - due Wed, Oct 12, 9am

From last time: ae-08

Let’s tidy up our plot a bit more!

Data privacy

“Your” data

Every time we use apps, websites, and devices, our data is being collected and used or sold to others.

More importantly, law enforcement, financial institutions, and governments use data to make decisions that directly affect people’s lives.

Privacy of your data

What pieces of data have you left on the internet today? Think through everything you’ve logged into, clicked on, checked in, either actively or automatically, that might be tracking you. Do you know where that data is stored? Who it can be accessed by? Whether it’s shared with others?

Sharing your data

What are you OK with sharing?

- Name

- Age

- Phone Number

- List of every video you watch

- List of every video you comment on

- How you type: speed, accuracy

- How long you spend on different content

- List of all your private messages (date, time, person sent to)

- Info about your photos (how, where (GPS), and when each was taken)

What does Google think/know about you?

Have you ever thought about why you’re seeing an ad on Google? Google it! Try to figure out if you have ad personalization on and how your ads are personalized.


Your browsing history

Which of the following uses of your browsing history are you OK with?

- For serving you targeted ads

- To score you as a candidate for a job

- To predict your race/ethnicity for voting purposes

Who else gets to use your data?

Suppose you create a profile on a social media site and share your personal information on your profile. Who else gets to use that data?

- Companies the social media company has a connection to?

- Companies the social media company sells your data to?

- Researchers?

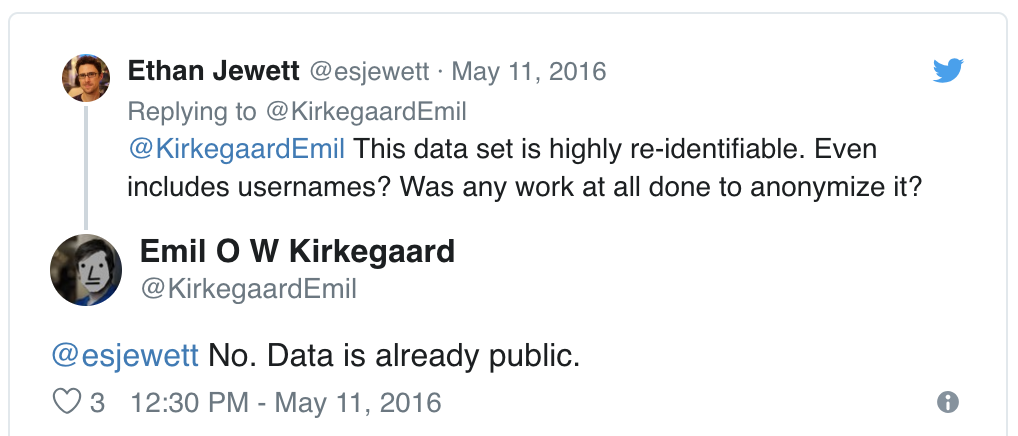

OkCupid data breach

- In 2016, researchers published data on 70,000 OkCupid users, including usernames, political leanings, drug usage, and intimate sexual details

- Researchers didn’t release the real names and pictures of OkCupid users, but their identities could easily be uncovered from the details provided, e.g. usernames

“Some may object to the ethics of gathering and releasing this data. However, all the data found in the dataset are or were already publicly available, so releasing this dataset merely presents it in a more useful form.”

Researchers Emil Kirkegaard and Julius Daugbjerg Bjerrekær

Algorithmic bias

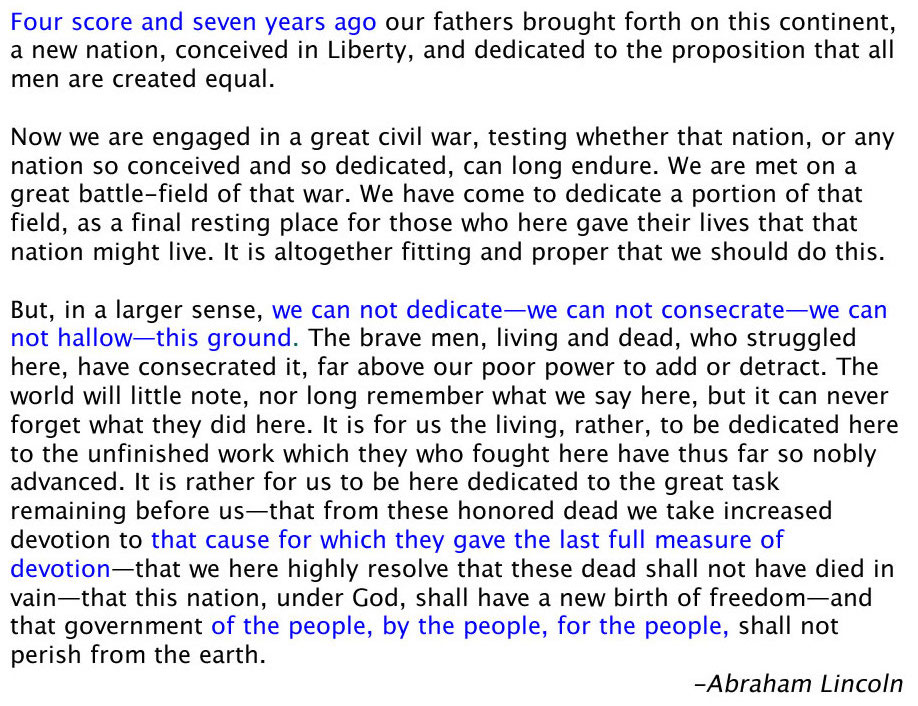

Gettysburg address

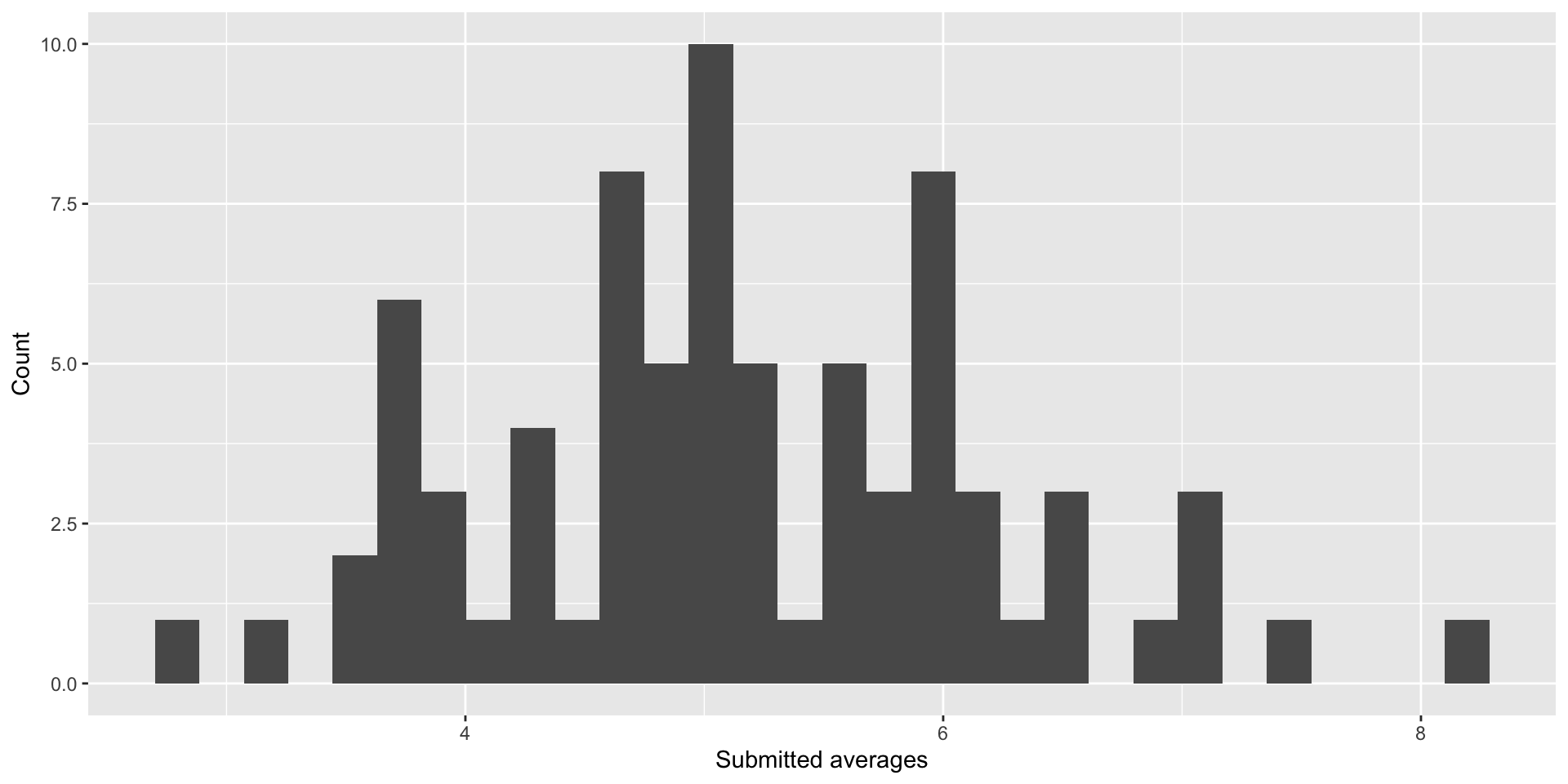

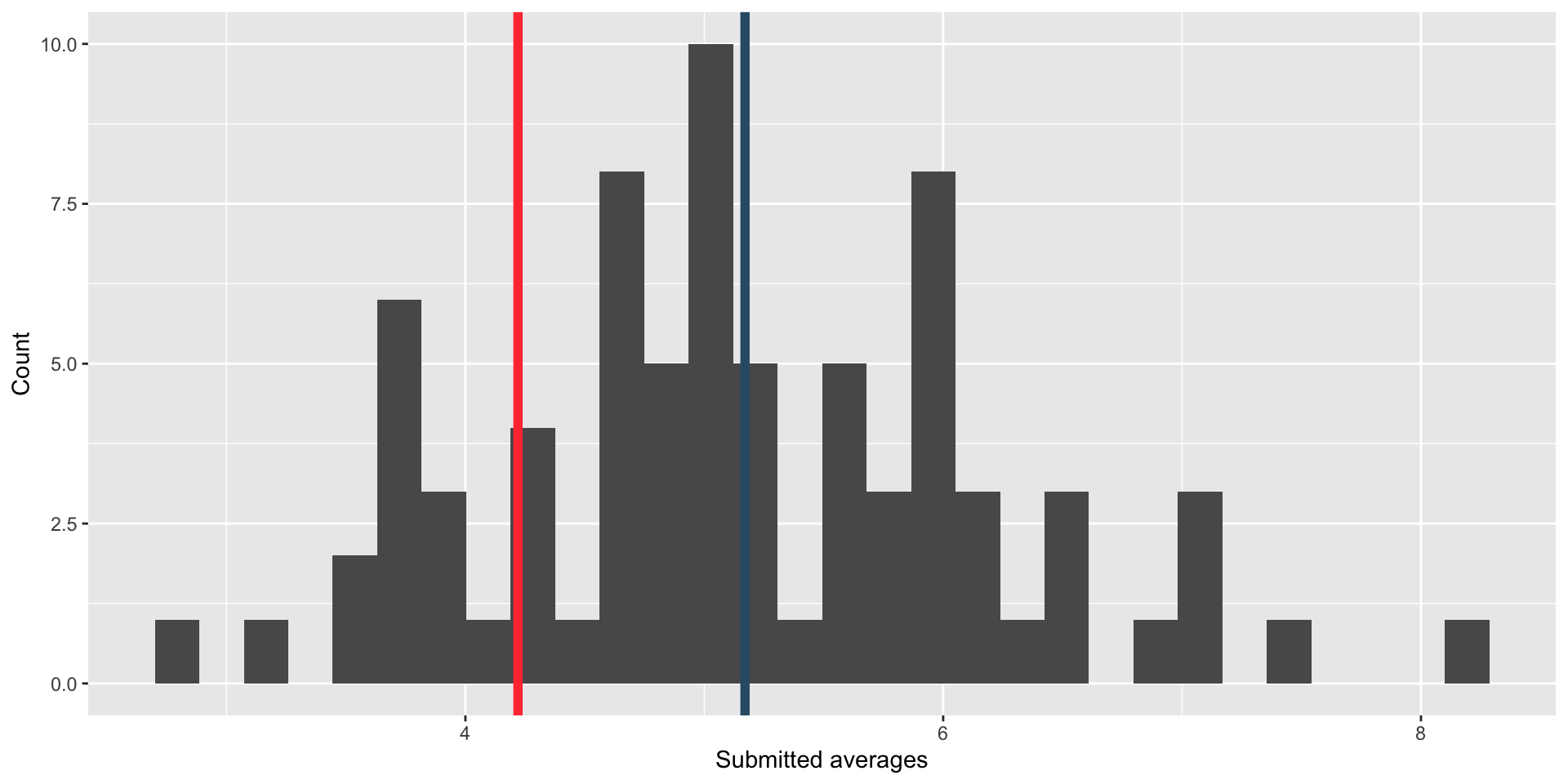

Randomly select 10 words from the Gettysburg Address and calculate the mean number of letters in these 10 words. Submit your answer at bit.ly/gburg199.

Your responses

Comparison to “truth”
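One way to see how hand-picked samples can drift from the truth is a small simulation. The sketch below is only an illustration, not the class activity itself: it uses just the opening sentence of the Gettysburg Address rather than the full text, and it assumes (hypothetically) that a person picking words "at random" by eye is more likely to land on longer words, modeled here as selection with probability proportional to word length.

```python
import random
import re
from statistics import mean

# Opening sentence only; the in-class exercise uses the full address.
OPENING = (
    "Four score and seven years ago our fathers brought forth on this "
    "continent, a new nation, conceived in Liberty, and dedicated to the "
    "proposition that all men are created equal."
)

words = re.findall(r"[A-Za-z]+", OPENING)
lengths = [len(w) for w in words]
true_mean = mean(lengths)  # the "truth" for this sentence

rng = random.Random(199)  # fixed seed so the run is reproducible


def random_sample_mean(n=10):
    """Mean word length in a simple random sample of n distinct words."""
    return mean(len(w) for w in rng.sample(words, n))


def eye_catching_sample_mean(n=10):
    """Mean word length when longer words are more likely to be picked.

    Assumption for illustration: selection probability is proportional
    to word length, and words are drawn with replacement for simplicity.
    """
    return mean(len(w) for w in rng.choices(words, weights=lengths, k=n))


random_means = [random_sample_mean() for _ in range(2000)]
biased_means = [eye_catching_sample_mean() for _ in range(2000)]

print(f"True mean length:             {true_mean:.2f}")
print(f"Average of random samples:    {mean(random_means):.2f}")
print(f"Average of 'by eye' samples:  {mean(biased_means):.2f}")
```

Under these assumptions, the random samples center on the true mean while the length-weighted samples overestimate it, which is the pattern to look for when comparing class responses to the truth.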

Garbage in, garbage out

- In statistical modeling and inference we talk about “garbage in, garbage out” – if you don’t have good (random, representative) data, results of your analysis will not be reliable or generalizable.

- Corollary: Bias in, bias out.

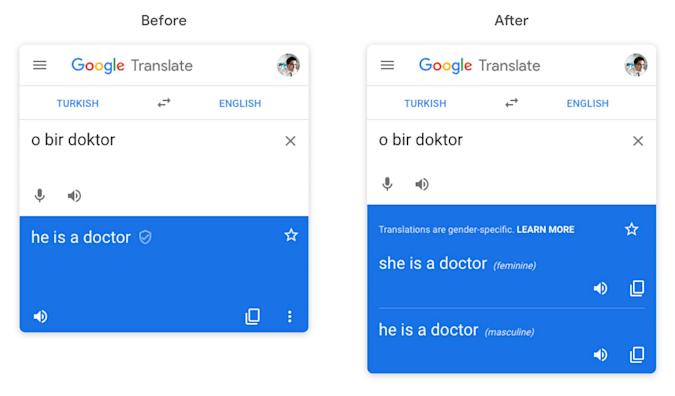

Google translate

What might be the reason for Google’s gendered translation? How do ethics play into this situation?

ae-09 - Part 1

- Go to the course GitHub org and find your ae-09 repo (repo name will be suffixed with your GitHub name).

- Clone the repo in your container, open the Quarto document in the repo, and follow along and complete the exercises.

- Work on Part 1 - Stochastic Parrots

- Render, commit, and push your edits by the AE deadline – 3 days from today.

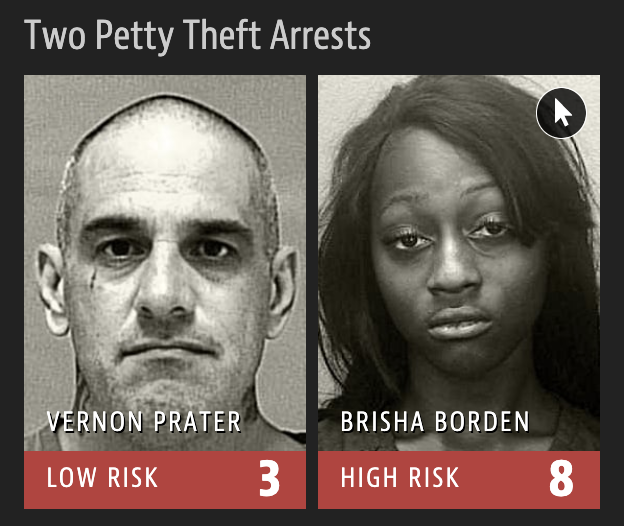

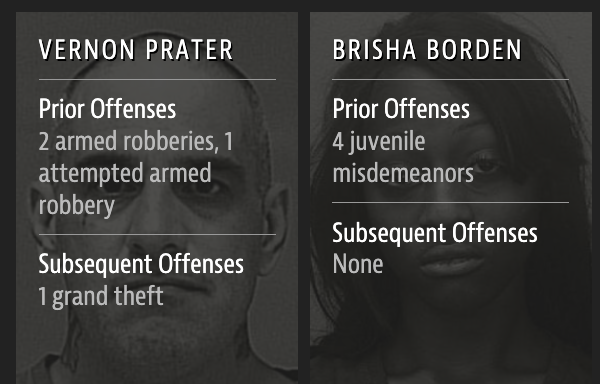

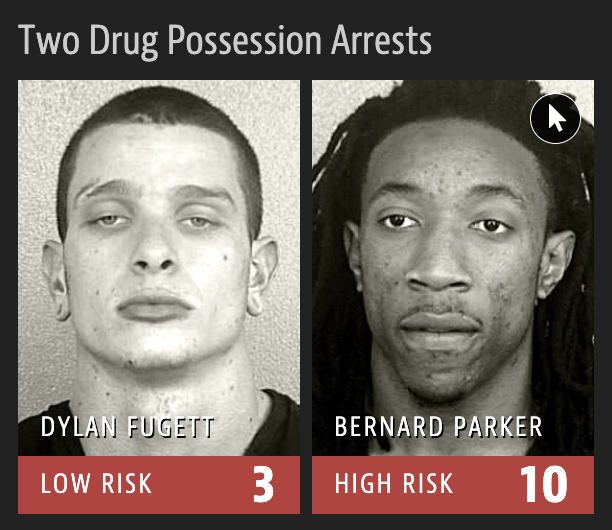

Machine Bias

2016 ProPublica article on an algorithm used to rate a defendant’s risk of future crime:

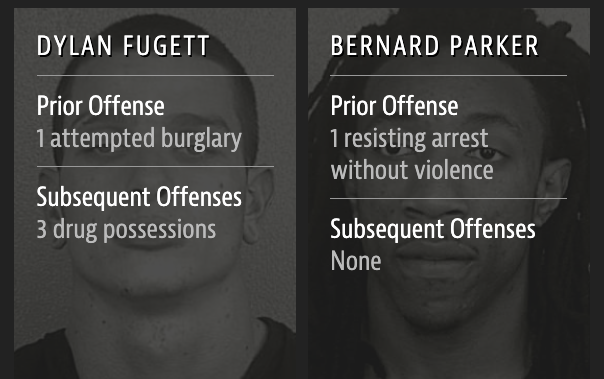

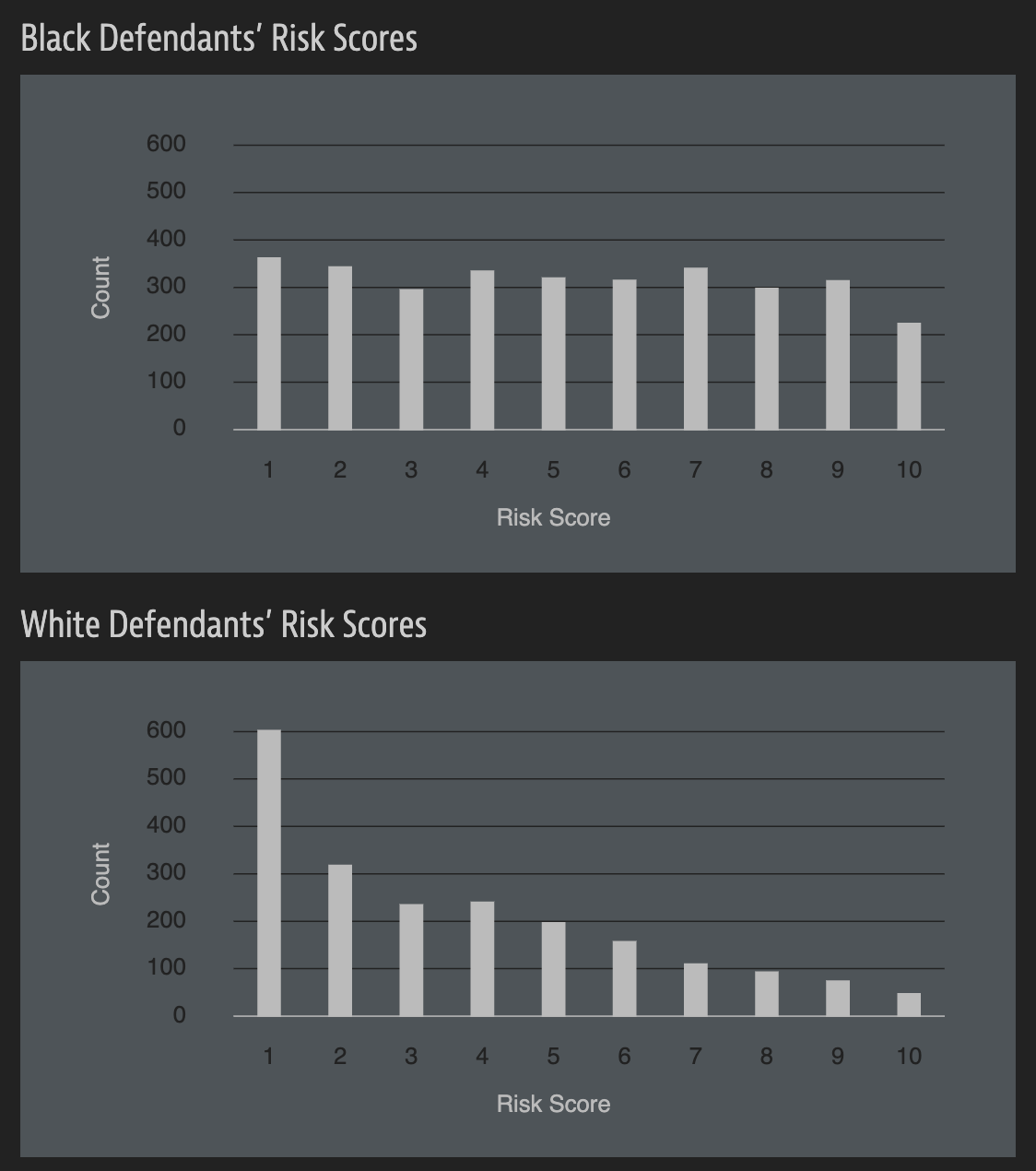

In forecasting who would re-offend, the algorithm made mistakes with black and white defendants at roughly the same rate but in very different ways.

The formula was particularly likely to falsely flag black defendants as future criminals, wrongly labeling them this way at almost twice the rate as white defendants.

White defendants were mislabeled as low risk more often than black defendants.
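To make "the same rate of mistakes, but in very different ways" concrete, here is a minimal sketch with invented counts. These numbers are hypothetical and are not ProPublica's data; the group names and the `error_summary` helper are made up for illustration. Both groups have the same overall accuracy, yet the types of error differ.

```python
# Hypothetical confusion-matrix counts per group (invented for illustration).
# tp: flagged high risk and re-offended    fn: flagged low risk but re-offended
# fp: flagged high risk, did not re-offend tn: flagged low risk, did not re-offend
counts = {
    "group_a": {"tp": 35, "fn": 15, "fp": 25, "tn": 25},
    "group_b": {"tp": 25, "fn": 25, "fp": 15, "tn": 35},
}


def error_summary(c):
    """Overall accuracy plus the two error rates for one group."""
    total = sum(c.values())
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        # Share of people who did NOT re-offend but were flagged high risk.
        "false_positive_rate": c["fp"] / (c["fp"] + c["tn"]),
        # Share of people who DID re-offend but were flagged low risk.
        "false_negative_rate": c["fn"] / (c["fn"] + c["tp"]),
    }


summaries = {group: error_summary(c) for group, c in counts.items()}
for group, summary in summaries.items():
    print(group, summary)
```

In this made-up example, accuracy alone would suggest the two groups are treated alike; splitting the errors into false positives and false negatives is what reveals the difference.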

Risk score errors

What is common among the defendants who were assigned a high/low risk score for reoffending?

Risk scores

How can an algorithm that doesn’t use race as input data be racist?
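One possible mechanism to consider is a proxy variable: an input that is not race but is strongly associated with it. The simulation below is a toy sketch under loud assumptions. The groups "A" and "B", the neighborhoods, the 80%/20% split, and the scoring rule are all hypothetical, and nothing here describes how any real risk-scoring tool works.

```python
import random

rng = random.Random(2022)  # fixed seed so the run is reproducible


def simulate_person():
    """Return (group, neighborhood) for one simulated person.

    Assumption for illustration: group A members live in the "north"
    neighborhood 80% of the time, group B members 20% of the time.
    """
    group = rng.choice(["A", "B"])
    p_north = 0.8 if group == "A" else 0.2
    neighborhood = "north" if rng.random() < p_north else "south"
    return group, neighborhood


def risk_label(neighborhood):
    """Hypothetical scoring rule that never sees group membership."""
    return "high" if neighborhood == "north" else "low"


people = [simulate_person() for _ in range(10_000)]


def high_risk_share(group):
    """Share of a group's members that the rule labels high risk."""
    labels = [risk_label(n) for g, n in people if g == group]
    return sum(label == "high" for label in labels) / len(labels)


share_a = high_risk_share("A")
share_b = high_risk_share("B")
print(f"Labeled high risk, group A: {share_a:.1%}")
print(f"Labeled high risk, group B: {share_b:.1%}")
```

Even though `risk_label` takes only the neighborhood as input, the two groups end up with very different shares of high-risk labels, because neighborhood carries information about group membership.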

ae-09 - Part 2

- Go to the course GitHub org and find your ae-09 repo (repo name will be suffixed with your GitHub name).

- Clone the repo in your container, open the Quarto document in the repo, and follow along and complete the exercises.

- Work on Part 2 - Predicting ethnicity

- Render, commit, and push your edits by the AE deadline – 3 days from today.