Web scraping

Lecture 13

Duke University

STA 199 - Fall 2022

10/13/22

Warm up

While you wait for class to begin…

- If you haven’t yet done so, install the Chrome browser and the SelectorGadget extension.

- Clone your ae-10 project from GitHub, render your document, update your name, and commit and push.

Announcements

- Watch for an announcement on team assignments before Monday’s lab

- Start thinking about project ideas to bring to your team

- HW 3 is due at 11:59 on Thursday

Midterm feedback I

- Thank you to the 49 of you who filled it out!

- How would you change the class: Overwhelming response: More live coding

- Pace:

- 78% About right

- 6% Too fast

- 4% Too slow

- 12% No response

- Number of hours spent outside of class:

- Avg 5.5 hours (Expected ~6.25 hours)

- If you’re consistently spending less and not doing well, you should be putting more time in

- If you’re consistently spending more and feel like it’s not working, reach out for help

Midterm feedback II

- How are you learning best:

- AEs during class

- Assignments

- Videos

- TAs:

- Overwhelmingly positive!

- If you’re not making use of the TA resources, your peers think you should!

Midterm feedback III

General feedback:

Positive: Generally, people like the interactive nature of the class, the live coding aspect, and the topics

Constructive:

- Lessen overlap between prep materials – Can’t lessen since people learn differently, but I can annotate the overlap. You should also feel free to go through pieces at a faster pace if they feel redundant.

- Can be difficult to catch up on AEs if bits are missed – Ask on Slack or come by office hours, no need to wait for answer keys to be posted.

- Sometimes grading feels harsh – Ask for clarification on points taken if not provided and don’t hesitate to ask for a regrade if errors are made in grading.

- We haven’t done any statistics – All of this is (modern) statistics!

Web scraping

Scraping the web: what? why?

An increasing amount of data is available on the web

These data are provided in an unstructured format: you can always copy and paste, but it’s time-consuming and prone to errors

Web scraping is the process of extracting this information automatically and transforming it into a structured dataset

Two different scenarios:

Screen scraping: extract data from the source code of a website, with an HTML parser (easy) or regular expression matching (less easy).

Web APIs (application programming interfaces): the website offers a set of structured HTTP requests that return JSON or XML files.
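To make the "easy" vs. "less easy" contrast in screen scraping concrete, here is a minimal sketch that pulls the same title out of a small page in both ways. The HTML snippet, class names, and variable names are made up for illustration and are not from any real site.

```r
library(rvest)

# A tiny hand-written page (an assumption for illustration, not a real site)
raw <- '<h1 class="title">Scraping 101</h1><p class="author">By A. Student</p>'
page <- minimal_html(raw)

# HTML parser: select the element with a CSS selector, then extract its text
title_parsed <- page |>
  html_element("h1.title") |>
  html_text2()

# Regular expression: capture whatever sits between the <h1 ...> and </h1> tags
title_regex <- sub(".*<h1[^>]*>(.*?)</h1>.*", "\\1", raw, perl = TRUE)

title_parsed
title_regex
```

Both approaches return the same string here, but the regular expression depends on the exact shape of the markup, while the parser only depends on the selector.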

Hypertext Markup Language

- Most of the data on the web is still available as HTML

- It is structured (hierarchical / tree based), but it’s often not available in a form useful for analysis (flat / tidy).
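As a rough illustration of "hierarchical vs. flat", the sketch below first inspects the nesting of a small made-up HTML fragment and then flattens it into one row per article. The markup, ids, and class names are assumptions chosen for the example.

```r
library(rvest)

# Hand-written fragment: a feed that contains articles that contain fields
page <- minimal_html('
  <div id="feed">
    <div class="article"><h2>First piece</h2><span class="author">Ann</span></div>
    <div class="article"><h2>Second piece</h2><span class="author">Bo</span></div>
  </div>
')

# The tree: the feed has two children, each of which has its own children
feed <- page |> html_element("#feed")
child_tags <- feed |> html_children() |> html_name()

# The flat version: one row per article, one column per field
articles <- page |> html_elements("div.article")
flat <- data.frame(
  title  = articles |> html_element("h2") |> html_text2(),
  author = articles |> html_element("span.author") |> html_text2()
)

child_tags
flat
```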

rvest

- The rvest package makes basic processing and manipulation of HTML data straightforward

- It’s designed to work with pipelines built with |>

- rvest.tidyverse.org

Core rvest functions

- read_html() - Read HTML data from a URL or character string (actually from the xml2 package, but most often used along with other rvest functions)

- html_element() / html_elements() - Select the specified element(s) from an HTML document

- html_table() - Parse an HTML table into a data frame

- html_text() - Extract text from an element

- html_text2() - Extract text from an element and lightly format it to match how text looks in the browser

- html_name() - Extract elements’ names

- html_attr() / html_attrs() - Extract a single attribute or all attributes
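The functions above can be tried without touching the network. This is a small sketch on a hand-written page built with minimal_html(); in practice the page object would come from read_html() on a URL. The markup and object names are assumptions for illustration.

```r
library(rvest)

# Hand-written page standing in for the result of read_html("<some url>")
page <- minimal_html('
  <p id="intro" class="lead">Hello <b>world</b></p>
  <a href="https://rvest.tidyverse.org" title="docs">rvest site</a>
  <table>
    <tr><th>x</th><th>y</th></tr>
    <tr><td>1</td><td>a</td></tr>
    <tr><td>2</td><td>b</td></tr>
  </table>
')

# Select a single element, then inspect it
p <- page |> html_element("p")
p_name  <- html_name(p)         # tag name of the element
p_text  <- html_text2(p)        # text as it would appear in the browser
p_id    <- html_attr(p, "id")   # one attribute
p_attrs <- html_attrs(p)        # all attributes, as a named character vector

# Select all matching elements, then pull one attribute from each
hrefs <- page |> html_elements("a") |> html_attr("href")

# Parse the table into a data frame (a tibble)
tbl <- page |> html_element("table") |> html_table()
```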

Application exercise

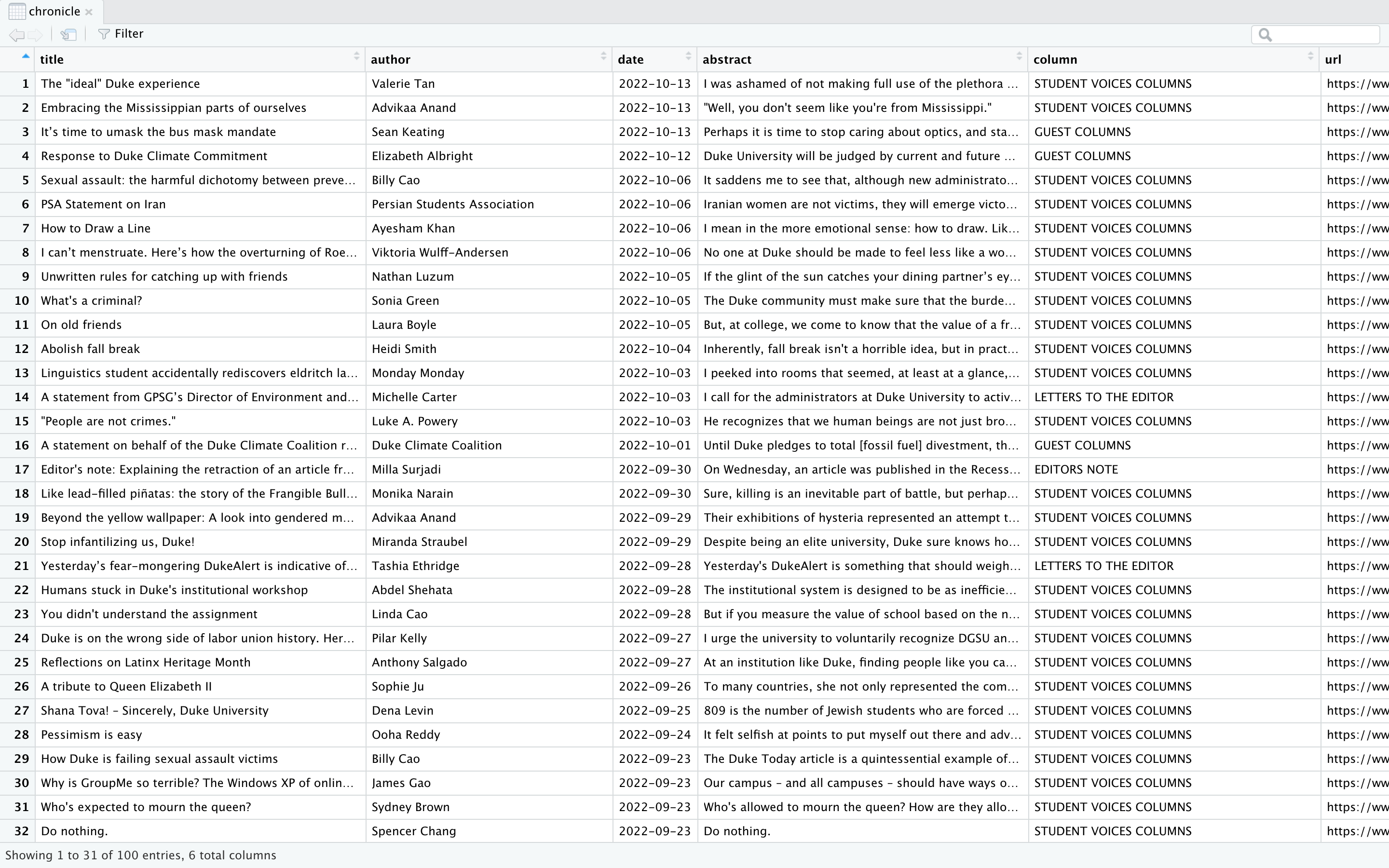

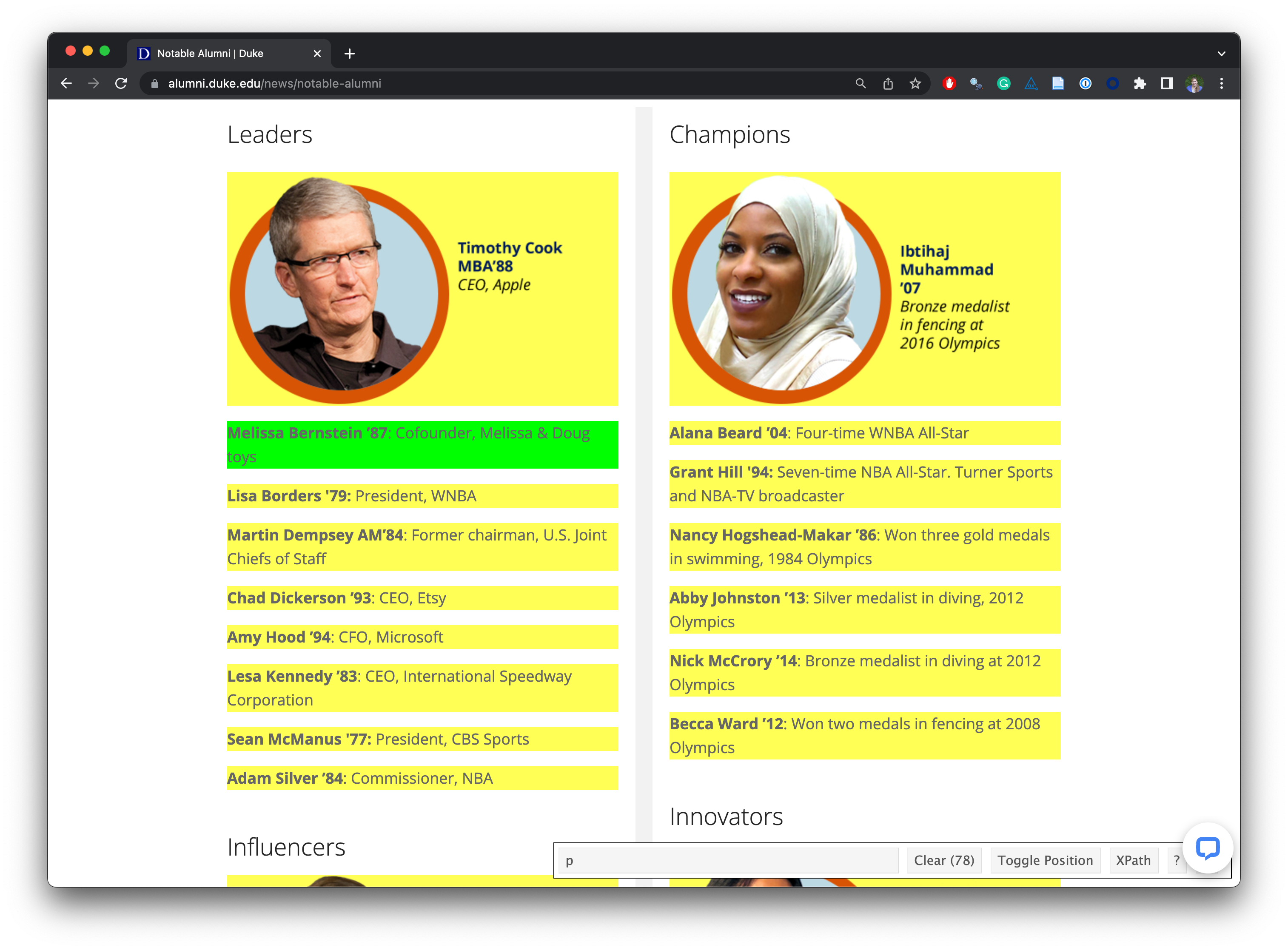

Opinion articles in The Chronicle

- Go to https://www.dukechronicle.com/section/opinion

- Scroll to the bottom and choose page 1

- How many articles are on the page?

- Take a look at the URL. How can you change the number of articles displayed by modifying the URL? Try displaying 100 articles.
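Once you know which part of a URL controls the page and the number of items, the URLs can be built programmatically. The sketch below uses a placeholder site and hypothetical query parameter names (page and per_page); check the real URL in your browser rather than relying on these names.

```r
# Placeholder base URL and hypothetical parameter names, for illustration only
base_url <- "https://example.com/section/opinion"

# Build a URL for a given page number and number of items per page
build_url <- function(page = 1, per_page = 20) {
  paste0(base_url, "?page=", page, "&per_page=", per_page)
}

# One page with more items on it
one_big_page <- build_url(per_page = 100)

# Several consecutive pages with the default number of items
first_three <- vapply(1:3, build_url, character(1))

one_big_page
first_three
```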

Goal

- Scrape data and organize it in a tidy format in R

- Perform light text parsing to clean data

- Summarize and visualize the data

ae-10

- Go to the course GitHub org and find your ae-10 (repo name will be suffixed with your GitHub name).

- Clone the repo in your container, open the Quarto document in the repo, and follow along and complete the exercises.

- Render, commit, and push your edits by the AE deadline – 3 days from today.

Recap

- Use SelectorGadget to identify tags for elements you want to grab

- Use rvest to first read the whole page (into R) and then parse the object you’ve read in into the elements you’re interested in

- Put the components together in a data frame (a tibble) and analyze it like you analyze any other data
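The three recap steps can be sketched end to end as follows. The page here is a hand-written stand-in so the example runs offline; the selectors (.headline, .byline), the byline format, and the column names are assumptions, not the structure of any particular site.

```r
library(rvest)
library(tibble)

# Step 1: read the page (a stand-in for read_html("<url>"))
page <- minimal_html('
  <div class="article"><h2 class="headline">Campus news</h2><p class="byline">By Ann Lee | 10/10/2022</p></div>
  <div class="article"><h2 class="headline">On midterms</h2><p class="byline">By Bo Kim | 10/11/2022</p></div>
  <div class="article"><h2 class="headline">Fall break</h2><p class="byline">By Ann Lee | 10/12/2022</p></div>
')

# Step 2: parse out the elements found with SelectorGadget
titles  <- page |> html_elements(".headline") |> html_text2()
bylines <- page |> html_elements(".byline") |> html_text2()

# Step 3: assemble a tibble, with light text parsing to split author and date
articles <- tibble(
  title  = titles,
  author = sub("^By (.*) \\| .*$", "\\1", bylines),
  date   = as.Date(sub("^.* \\| ", "", bylines), format = "%m/%d/%Y")
)

# Analyze like any other data, e.g. count articles per author
author_counts <- table(articles$author)

articles
author_counts
```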

A new R workflow

When working in a Quarto document, your analysis is re-run each time you render

If web scraping in a Quarto document, you’d be re-scraping the data each time you render, which is undesirable (and not nice)!

An alternative workflow:

- Use an R script to save your code

- Save interim data scraped using the code in the script as CSV or RDS files

- Use the saved data in your analysis in your Quarto document
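A minimal sketch of this workflow is below. The file names and the placeholder data frame are assumptions, and tempdir() is used only so the example runs anywhere; in a project you would typically write to a folder such as data/ inside the repo.

```r
# --- In the R script (run by hand, only when you want fresh data) ---

# Placeholder for the data frame produced by your scraping code
scraped <- data.frame(
  title  = c("Campus news", "On midterms"),
  author = c("Ann Lee", "Bo Kim")
)

out_dir  <- tempdir()  # stand-in for a project folder such as "data/"
csv_path <- file.path(out_dir, "articles.csv")
rds_path <- file.path(out_dir, "articles.rds")

write.csv(scraped, csv_path, row.names = FALSE)  # portable, human-readable
saveRDS(scraped, rds_path)                       # preserves R column types exactly

# --- In the Quarto document (re-run often, never touches the website) ---

articles_csv <- read.csv(csv_path)
articles_rds <- readRDS(rds_path)
```

CSV is easier to inspect and share, while RDS keeps column types such as dates and factors intact, so the choice depends on what the analysis needs.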

Web scraping considerations

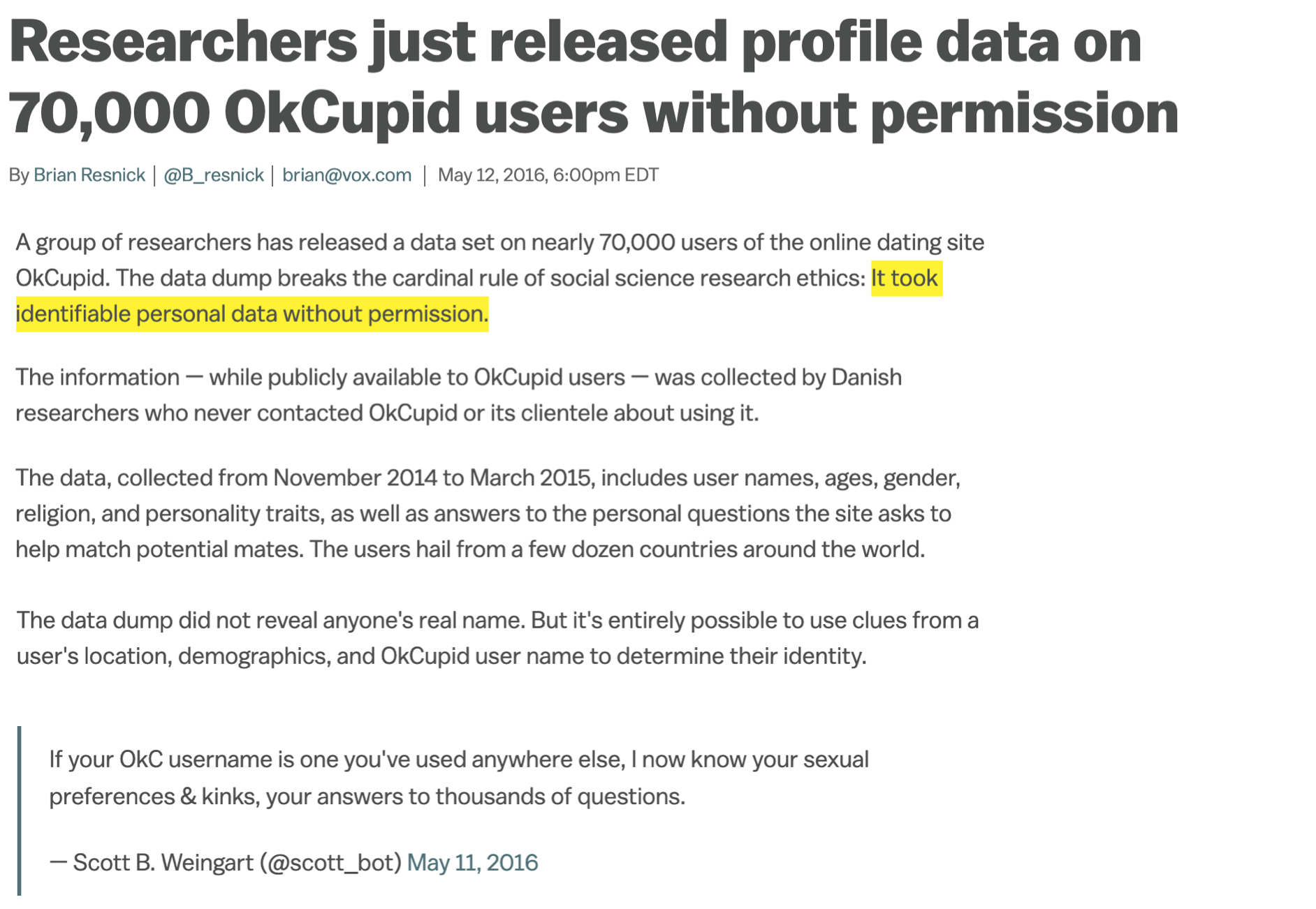

Ethics: “Can you?” vs “Should you?”

“Can you?” vs “Should you?”

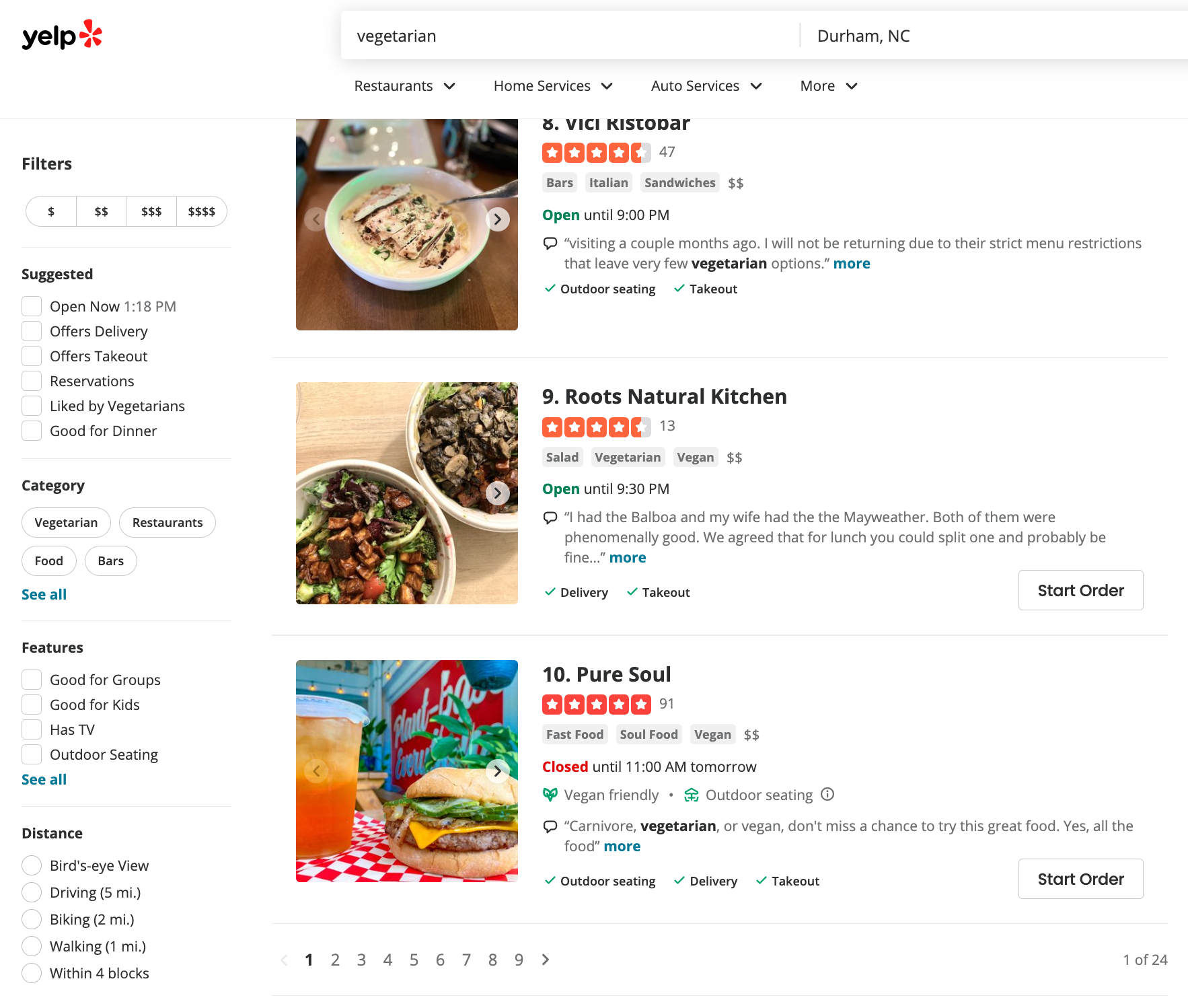

Challenges: Unreliable formatting

Challenges: Data broken into many pages

Workflow: Screen scraping vs. APIs

Two different scenarios for web scraping:

Screen scraping: extract data from the source code of a website, with an HTML parser (easy) or regular expression matching (less easy)

Web APIs (application programming interfaces): the website offers a set of structured HTTP requests that return JSON or XML files